AI as a Force Multiplier for Small Platform Teams

How AI coding assistants amplify engineering judgment

January 27, 2026

The Opportunity

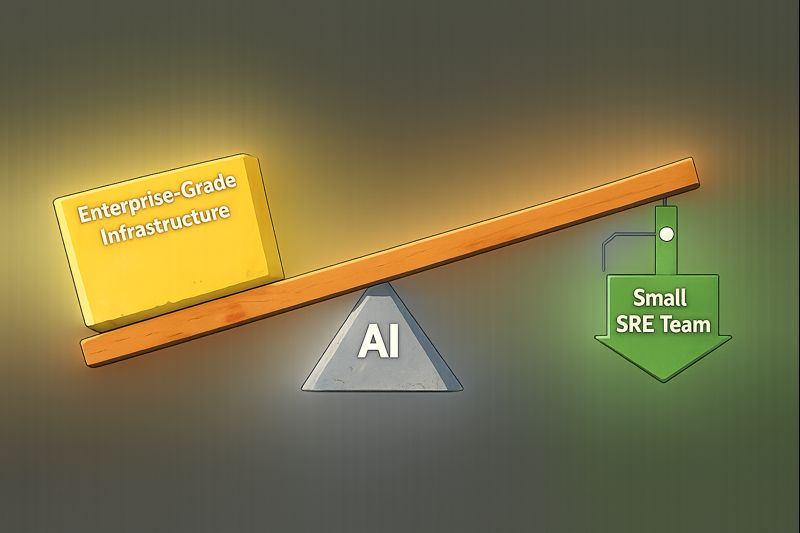

On our small but growing platform team at Orbital, we’ve found that AI coding assistants change how much infrastructure engineering a handful of people can deliver.

We’re not just talking about faster code completion. We’re talking about fundamentally changing how small teams deliver enterprise-grade infrastructure by leveraging AI tools as virtual team members.

Here’s what this looks like in practice: Recently, we needed to query our entire GitHub organization for Dependabot security alerts. Using AI tools, I explored multiple implementation approaches in parallel—something that would traditionally take days or weeks. Within hours, I had working prototypes using different tools and could make an informed decision based on actual running code, not theoretical arguments.

This is the core insight: AI tools don’t replace engineering judgment—they amplify it by letting you explore the solution space faster.

We’re hiring! If you’re an SRE who wants to work with AI tools as force multipliers and help us build a world-class platform team, we’d love to talk. Read on to see how we’re approaching this challenge.

Why AI Tools Are Essential for Small Platform Teams

Here’s the reality of working in a lean platform team: We manage a broad surface area—Kubernetes clusters, CI/CD pipelines, security compliance, infrastructure-as-code, on-call rotations, developer support, and cost optimization. This is the exciting challenge of working at a growth-stage startup: wide scope, high impact, rapid iteration.

Every decision has an attention cost that compounds over time. This makes tool selection critical.

This is where AI tools become force multipliers. As Simon Willison points out, our role has fundamentally shifted:

We’re no longer primarily code writers. We’re code validators.

AI tools let me:

- Rapidly prototype multiple approaches in hours instead of days

- Explore design trade-offs without committing weeks to the wrong path

- Test and validate each approach with actual running code

- Make informed decisions based on proven solutions, not theoretical arguments

The key difference: Instead of spending days writing one approach and hoping it works, I spend hours generating multiple approaches, proving they work, and choosing the best one.

AI tools help you answer the question faster—but you’re still responsible for proving the answer is correct.

How AI Tools Amplify Small Team Capabilities

We’re intentionally running a lean platform team. This means we need to be strategic about how we allocate attention and where we create leverage.

Our small team often needs to handle work that might traditionally be split across:

- Platform team: Building and maintaining infrastructure

- Enabling team: Researching tools and supporting developers

- Stream-aligned team: Delivering features that require infrastructure expertise

AI tools as force multipliers: AI coding assistants effectively expand our team’s capabilities by acting as:

- Research partners who can explore multiple implementation approaches simultaneously

- Implementation accelerators that can scaffold solutions in Python, Bash, Terraform, or other tools

- Pair programmers who maintain velocity without sacrificing thoughtfulness

This isn’t about replacing humans—it’s about amplifying what skilled engineers can deliver.

We’re growing intentionally: As a scaling startup, we’re actively hiring SREs to expand the platform team. AI tools aren’t a substitute for great engineers—they’re what lets our current team punch above our weight while we build out the organization.

Working lean with AI amplification actually reinforces good habits: when you have AI to rapidly prototype alternatives, you develop a discipline of exploring options before committing. These patterns scale beautifully as the team grows.

How AI Accelerates the Build vs Buy Decision

With an AI pair programmer, I can explore the full decision tree in hours instead of weeks:

Can a managed service do this?

- If yes: Pay for it. Your time is more expensive than the service.

- Examples: managed Kubernetes, managed databases, managed CI/CD

Can existing CLI tools orchestrate this?

- If yes: Don’t build what already exists—compose existing tools.

- Examples: gh, kubectl, az, aws, terraform

Is this complex enough to need a proper application?

- If yes: Prototype to validate the approach.

- Examples: Custom operators, complex business logic, APIs

Does building this create competitive advantage?

- If no: You probably shouldn’t be building it at all.

- Go back to the first question and look again for a managed service or existing tools.
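
The questions above can be read as an ordered checklist. The following is a minimal Python sketch of that reading, not the team’s actual tooling: the `Problem` fields, the `Choice` names, and the `decide` function are all hypothetical, and treating competitive advantage as a gate on the prototype step is an assumption about how the last two questions combine.

```python
from dataclasses import dataclass
from enum import Enum


class Choice(Enum):
    MANAGED_SERVICE = "pay for a managed service"
    COMPOSE_CLI = "compose existing CLI tools"
    PROTOTYPE_APP = "prototype a proper application"
    DO_NOT_BUILD = "do not build it; revisit the earlier questions"


@dataclass(frozen=True)
class Problem:
    # Hypothetical yes/no answers to the four questions, in order.
    managed_service_exists: bool
    cli_tools_can_orchestrate: bool
    needs_proper_application: bool
    creates_competitive_advantage: bool


def decide(p: Problem) -> Choice:
    """Walk the questions in order and stop at the first 'yes'."""
    if p.managed_service_exists:
        return Choice.MANAGED_SERVICE
    if p.cli_tools_can_orchestrate:
        return Choice.COMPOSE_CLI
    # Assumption: a custom application is only worth prototyping
    # when it also creates competitive advantage.
    if p.needs_proper_application and p.creates_competitive_advantage:
        return Choice.PROTOTYPE_APP
    return Choice.DO_NOT_BUILD
```

The ordering is the point of the sketch: cheaper-to-own options are checked first, so a custom application is only reached when both earlier answers are "no".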

The AI advantage: I can ask Claude Code to implement multiple options in the same afternoon. Compare them. Understand the trade-offs. Make an informed decision based on working code.

As an SRE in a lean team, I optimize aggressively for managed services and existing tools. Every service I run myself requires monitoring, patching, and maintenance. Every custom application is maintenance debt. Choose what you own carefully.

Engineering Principles in Action (Amplified by AI)

Engineering principles aren’t just platitudes—they’re decision-making tools. AI tools make them more powerful. Two of our team’s product engineering mantras in particular guide how I approach problem-solving:

Fight with the Weapons You Have 🏹

“Success comes from using the people, tools, and knowledge available to hit our goals, even in less-than-ideal conditions.”

As an SRE in a lean team, my most precious resource isn’t compute or storage—it’s cognitive bandwidth. Every new dependency is a future maintenance burden. Every abstraction layer is mental overhead when debugging under pressure.

AI tools are now one of the weapons I have. They’re installed, available, and ready to use today.

The key insight: AI tools let me explore more options faster, then choose the right one for the task based on actual working implementations.

The question isn’t “what’s the best tool?” It’s “what’s the best tool that we already have and can maintain while juggling everything else?”

For the security scanner, this meant comparing a full-featured application with comprehensive testing against a simpler script that orchestrates existing CLI tools. AI tools let me build both in hours and make an informed choice based on our team’s constraints.

Ship Early and Often 🏎️

“Lightweight MVPs beat over-engineered ‘perfect’ builds. The faster we build–measure–learn, the faster we win.”

With Claude Code as a pair programmer, I can ship faster and learn more. Instead of spending days building one approach and hoping it’s the right one, I can explore multiple approaches in hours—each with working code I can test and validate.

This is the force multiplier. AI doesn’t just help you ship. It helps you ship the right thing by letting you explore alternatives at a speed that would be impossible manually. Having multiple working prototypes in the same afternoon means decisions are based on proven functionality, not theoretical arguments.

Matching Tools to Problems

The value of AI tools isn’t that they help you write more code—it’s that they help you choose the right tool for each problem by letting you explore alternatives quickly.

For simple orchestration tasks, composing existing CLI tools often wins. For complex business logic, applications with strong typing and testing win. For long-lived services used by multiple teams, robust frameworks win.

The skill is knowing which is which. AI tools help you develop this skill by making exploration cheap. Instead of committing to an approach based on intuition, you can build working prototypes and make decisions based on proven functionality.

Unix Primitives as First-Class Citizens

There’s a deeper principle at work here. Anthropic designed their agent framework around Unix primitives as first-class citizens. As noted in the Claude Agent SDK workshop:

“The Bash tool is often the most powerful tool for an agent.”

Why? Because giving agents access to composable tools like grep, jq, and git means they can solve novel problems by combining existing utilities—exactly how human developers work.

Our own VP of AI, Matt Westcott, makes this argument in “Give Your LLM a Terminal”:

“We should give LLMs access to the tools that humans find effective for their work.”

Unix utilities were purpose-built for text manipulation—exactly where language models excel. These tools solve real problems without requiring custom integrations.

General-Purpose Over Specialized Tools

Instead of building custom integrations for every task, both Anthropic and our AI team bet on giving AI access to the Unix ecosystem. This enables:

- Flexible problem-solving through composition

- Leveraging battle-tested tools

- Avoiding the trap of building what already exists

- Replicating how humans actually work

This philosophy guided our security scanner implementation: compose existing CLI tools rather than building custom HTTP clients and JSON parsers.
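
As a rough illustration of that composition style, here is a minimal Python sketch that shells out to `gh api` instead of implementing an HTTP client. It is not the scanner described in this post. The function names are hypothetical, it assumes `gh` is installed and authenticated with permission to read organization-level Dependabot alerts, and it assumes each alert carries a `security_advisory.severity` field as in GitHub’s REST API responses.

```python
import json
import subprocess
from collections import Counter


def parse_pages(raw: str) -> list[dict]:
    """Flatten one or more concatenated JSON arrays into a single list.

    Paginated `gh api` output may arrive as several arrays back to back,
    so each one is decoded in turn rather than calling json.loads once.
    """
    decoder = json.JSONDecoder()
    items: list[dict] = []
    pos = 0
    while pos < len(raw):
        if raw[pos].isspace():
            pos += 1  # skip whitespace between pages
            continue
        page, pos = decoder.raw_decode(raw, pos)
        if not isinstance(page, list):
            raise ValueError("expected a JSON array per page")
        items.extend(page)
    return items


def fetch_open_alerts(org: str) -> list[dict]:
    """Ask the gh CLI for all open Dependabot alerts in an organization."""
    result = subprocess.run(
        [
            "gh", "api", "--paginate",
            f"/orgs/{org}/dependabot/alerts?state=open&per_page=100",
        ],
        check=True,           # raise if gh exits non-zero
        capture_output=True,
        text=True,
    )
    return parse_pages(result.stdout)


def count_by_severity(alerts: list[dict]) -> Counter:
    """Count alerts per advisory severity (assumed field, see lead-in)."""
    return Counter(a["security_advisory"]["severity"] for a in alerts)
```

Calling `count_by_severity(fetch_open_alerts("my-org"))`, with `my-org` as a placeholder organization name, would return a per-severity tally. Authentication, pagination, and retries stay inside `gh`, which is the maintenance trade-off the section argues for.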

Questioning “Production-Ready”

It’s easy to default to familiar patterns: use the language you know, add comprehensive testing, implement proper architecture. But production-ready for what?

AI tools help you step back and ask: Does this problem actually need that complexity? They make it cheap to explore simpler alternatives before committing to frameworks and abstractions.

The SRE Perspective: Optimize for Maintainability

When you’re in a small team maintaining infrastructure, what matters most is:

Mental Load — Can you understand this solution quickly when you need to modify or debug it?

Maintenance Burden — How much ongoing attention does this require? Dependency updates? Version conflicts?

Debugging Under Pressure — Can you trace through the logic when something breaks?

Onboarding Others — Can a new team member understand this without deep architecture knowledge?

AI tools help you explore these trade-offs with real implementations rather than hypotheticals.

In Part 2, I’ll share the practical frameworks and proof systems we use to work with AI agents as teammates—including our pragmatic decision framework, validation practices, and how to build an SRE agent practice that scales.

If you’re an SRE who appreciates pragmatic thinking, understands how AI tools amplify engineering velocity, and wants to help us build a proper platform team as we scale, we’re hiring.

Further Reading

- Code Proven to Work - Simon Willison - Essential reading on the role shift from code writer to code validator

- Claude Agent SDK Workshop: Unix Primitives as First-Class Citizens

- Give Your LLM a Terminal - Matt Westcott (Orbital VP of AI)

- Team Topologies: Organizing Business and Technology Teams

- We’re hiring SREs