The Road to Autonomy

From Thought to Action: The Rise of AI Agents

June 7, 2025

We’re hiring! Explore our Product Engineering Guide to learn how we build at Orbital.

Today’s Large Language Models (LLMs) can generate code that rivals experienced developers, analyse complex legal contracts in minutes, and draft compelling content. Yet for all their brilliance, they’re trapped in a simple loop: you prompt, they respond.

This keeps them confined to single-exchange tasks. But the most valuable work doesn’t fit into one interaction. It requires planning, gathering information from multiple sources, reasoning through complex findings, and delivering complete solutions autonomously.

This is where AI agents come in.

This article builds on concepts from From Reflex to Reason: How AI Learns to Think, which explains AI models, Base LLMs and Reasoning LLMs. I recommend reading it first to understand the foundations of what makes autonomous agents possible.

Contents

From Thinking to Doing
Passive chatbots to digital colleagues

Inside Orbital’s Agentic System
A coordinated team of AI specialists transforming property law

My Role: Building Agentic Systems
Building systems that evolve with AI breakthroughs

The DNA of an AI Agent
The three essential capabilities that make agents work

The Autonomy Benchmark
From 20-minute tasks to year-long projects

From Thinking to Doing

There’s plenty of debate over the precise definition of an AI agent, but that’s mostly noise. Put simply, an AI agent is a digital colleague that you can delegate meaningful tasks to, much like you would a human colleague.

For example, you might give an AI travel agent the dates you’d like to go away, your preferred climate, and a budget. It could then research suitable destinations for that time of year, find convenient flights, arrange accommodation to your liking, and return with a detailed itinerary asking for your confirmation.

The crucial difference isn’t just what the agent does, it’s how it works. Unlike typical LLM interactions where you guide each step, agents work autonomously. You set the goal, then step back. The agent handles the research, reasoning, and decision-making itself, only returning when the work is complete or clarification is needed.

This isn’t hypothetical. Many publicly available agents are already impressive and gaining real traction. Want a complete, cited research report on any topic available on the open web? Try OpenAI’s Deep Research. It’s extraordinary. Need to build a compelling web application but can’t code? Replit and Lovable let you build one simply by describing what you want in plain English.

Agents represent the fundamental shift from thinking to doing: AI that doesn’t just respond to prompts, but completes entire workflows autonomously.

Inside Orbital’s Agentic System

At Orbital, our product Copilot acts as an AI real estate lawyer, used primarily by law firms to simplify and accelerate property due diligence, an often complex, mundane, and expensive process. Rather than a single AI doing everything, Copilot is an agentic system: a coordinated team of AI agents, each specialising in different tasks.

Users upload legal documents associated with a real estate deal, then make requests in plain English. These could be anything from summarising key risks to comparing a lease against industry standards or drafting a due diligence report.

Our Orchestration agent receives these requests and creates a plan, determining which specialist agents to deploy and in what sequence. Our Summary agent tackles document analysis end-to-end. Our Retrieval agent searches through the uploaded files for specific passages that answer nuanced questions. Our Research agent provides insights into industry standards and compares scenarios against established practices.

This flexibility is key: instead of relying on static, hard-coded workflows, lawyers can describe exactly what they need in natural language, effectively doing the programming themselves. The Orchestration agent handles the complex, multi-step planning and coordination behind the scenes. The result is an incredibly powerful, adaptable, and autonomous system.
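To make that division of labour concrete, here is a minimal Python sketch of the orchestration pattern described above. It is an illustration under stated assumptions, not Orbital’s implementation: the specialist functions are hypothetical stubs standing in for LLM-backed agents, and the keyword-based `plan` function is a placeholder for the plan an Orchestration agent would generate with an LLM.

```python
from typing import Callable

# Hypothetical specialist agents. In a real system each would wrap an LLM call;
# here they are stubs returning labelled strings so the sketch runs offline.
def summary_agent(task: str, documents: list[str]) -> str:
    return f"summary of {len(documents)} document(s)"

def retrieval_agent(task: str, documents: list[str]) -> str:
    hits = [d for d in documents if "break clause" in d.lower()]
    return f"{len(hits)} passage(s) mentioning a break clause"

def research_agent(task: str, documents: list[str]) -> str:
    return "comparison against market-standard terms"

# Registry of specialists, looked up by name.
SPECIALISTS: dict[str, Callable[[str, list[str]], str]] = {
    "summary": summary_agent,
    "retrieval": retrieval_agent,
    "research": research_agent,
}

def plan(request: str) -> list[str]:
    """Placeholder planner. An Orchestration agent would ask an LLM for this
    sequence; keyword matching is used only to keep the example runnable."""
    text = request.lower()
    steps = []
    if "summar" in text:
        steps.append("summary")
    if "find" in text or "where" in text:
        steps.append("retrieval")
    if "standard" in text or "compare" in text:
        steps.append("research")
    return steps or ["summary"]

def orchestrate(request: str, documents: list[str]) -> dict[str, str]:
    """Run each planned specialist in order and collect their outputs."""
    results = {}
    for step in plan(request):
        results[step] = SPECIALISTS[step](request, documents)
    return results
```

For example, `orchestrate("Summarise the lease and compare it to market standard terms", docs)` plans and runs the summary and research steps in that order. Because specialists are looked up by name in `SPECIALISTS`, swapping one out or merging two into a single agent only changes that registry, not the orchestration loop.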

My Role: Building Agentic Systems

As an AI engineer building these systems at Orbital, I find that creating an effective agentic system is like assembling a specialist team, where each agent is powered by an LLM with different strengths: some great at analysis, others at research, others at writing. Staying at the forefront means constantly evaluating and reconfiguring that team to maximise what’s possible.

The unique challenge is the breakneck pace of AI advancements. Every few weeks or months, new LLMs and approaches emerge that completely change the game. A release can bring what feels like superpowers compared to previous versions, often with new trade-offs.

One LLM might excel at reasoning but be slower and more expensive, making it perfect for complex planning but impractical when speed matters. Another might be faster and cheaper: ideal for speed-critical tasks like understanding document structure, but weaker at complex, nuanced analysis.
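One way to encode these trade-offs is a small routing table that picks the cheapest model meeting a task’s requirements. The sketch below is hypothetical: the model names and the reasoning, latency, and cost figures are made-up placeholders, not benchmarks of any real LLM.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelProfile:
    name: str
    reasoning: int        # illustrative 1-5 score, not a real benchmark
    latency_s: float      # illustrative typical response time in seconds
    cost_per_call: float  # illustrative relative cost units

# Hypothetical profiles: a slow, strong model and a fast, cheap one.
MODELS = [
    ModelProfile("deep-reasoner", reasoning=5, latency_s=30.0, cost_per_call=10.0),
    ModelProfile("fast-reader", reasoning=2, latency_s=2.0, cost_per_call=1.0),
]

def choose_model(min_reasoning: int, max_latency_s: float) -> ModelProfile:
    """Return the cheapest configured model that meets both requirements."""
    candidates = [
        m for m in MODELS
        if m.reasoning >= min_reasoning and m.latency_s <= max_latency_s
    ]
    if not candidates:
        raise ValueError("no configured model meets these requirements")
    return min(candidates, key=lambda m: m.cost_per_call)
```

Planning work might call `choose_model(min_reasoning=4, max_latency_s=60)` and get the slower, stronger model, while document-structure parsing might call `choose_model(min_reasoning=1, max_latency_s=5)` and get the fast one. When a new LLM is released, adding or replacing a profile updates every caller at once.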

A new LLM might collapse multiple agents into one, handling all their specialised tasks and simplifying our approach. It could also unlock previously impossible capabilities. For example, as LLMs improve at understanding documents visually, we at Orbital can use them to analyse complex property plans and architectural drawings directly, accessing critical details that were previously invisible to AI.

All of this requires building for tomorrow’s capabilities, not just today’s. The key is creating robust scaffolding for an agentic system that can benefit from model improvements in the coming months: flexible enough to swap team members in and out, combine their strengths, and remove weak links. Success requires experimenting with new LLMs and approaches, prototyping quickly, and being able to spin up and tear down approaches rapidly as the landscape shifts.

The striking reality is that LLMs are becoming so capable that the bottleneck is shifting. It’s less about what the models can do, and more about whether we’ve given them the right context and tools to do it. When you combine well-designed scaffolding with leading LLMs, you start to see the early stages of Artificial General Intelligence (AGI): when AI matches human abilities across most intellectual tasks.

To understand what makes this possible, let’s look under the hood at what an AI agent really is.

The DNA of an AI Agent

An AI agent is an LLM equipped with three essential capabilities:

- A clear goal

- Memory

- The ability to take actions (including knowing when to stop)

Goals provide direction. Rather than just responding to prompts, agents need clear objectives to guide their reasoning and decision-making. This objective is typically defined in a system prompt: an initial instruction set that shapes the model’s behaviour and role from the outset. Whether it’s analysing legal documents for risks or researching market trends, the goal determines how the agent approaches the task.

Memory enables persistence. Agents retain key details throughout their work: the original request, their own reasoning steps, information they’ve gathered, and progress they’ve made. This persistence is crucial for maintaining coherence across complex, multi-step tasks that might involve dozens of decisions and tool uses.

Actions transform passive responders into autonomous agents. The term “agent” comes from “agency”: the capacity to act independently. While passive chatbots simply respond to prompts, agents actively deploy tools: web search engines to gather current information, APIs to retrieve specific data, internal databases to cross-reference findings, image generators to create content, and dozens of other specialised tools. Technically, an agent is an LLM running in a continuous loop with access to these tools. It chooses which tools to use, when to deploy them, interprets the results, reasons about its next move, and continues the process until it’s ready to respond. This autonomous tool use elevates agents from conversation partners to capable digital colleagues.
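The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor’s API: `scripted_llm` is a hypothetical stand-in for a real model call that returns either a tool request or a final answer, and `search_web` is a stub tool. The system message carries the goal, the growing `messages` list is the memory, and the loop ends when the model chooses to stop or a step limit is reached.

```python
import json
from typing import Callable

# Stub tool: a real agent might call a search API here.
def search_web(query: str) -> str:
    return f"3 recent articles about {query}"

TOOLS: dict[str, Callable[..., str]] = {"search_web": search_web}

def scripted_llm(messages: list[dict]) -> dict:
    """Hypothetical model stand-in: requests one search, then answers.
    A real LLM would make this decision from the full message history."""
    if not any(m["role"] == "tool" for m in messages):
        return {"tool": "search_web", "args": {"query": "UK office market trends"}}
    return {"final": "Summary based on: " + messages[-1]["content"]}

def run_agent(goal: str, request: str, llm=scripted_llm, max_steps: int = 10) -> str:
    # Goal: the system prompt. Memory: the accumulating message list.
    messages = [
        {"role": "system", "content": goal},
        {"role": "user", "content": request},
    ]
    for _ in range(max_steps):
        decision = llm(messages)
        if "final" in decision:  # the model decides it is done
            return decision["final"]
        # Action: run the chosen tool, then record the call and its result.
        result = TOOLS[decision["tool"]](**decision["args"])
        messages.append({"role": "assistant", "content": json.dumps(decision)})
        messages.append({"role": "tool", "content": result})
    return "Stopped: step limit reached"
```

Calling `run_agent("You are a market research agent.", "What are current office market trends?")` runs one search and then returns the scripted answer. The `max_steps` cap is a simple safeguard so that an agent that never decides to stop still terminates.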

Consider a research scenario. An agent investigating market trends might start by searching recent news and reports online. Finding conflicting data, it queries internal sales databases to check company performance, then cross-references industry reports to understand broader patterns. When gaps remain, it might search academic papers or regulatory filings. Each step builds on the previous findings, creating in minutes a comprehensive analysis that would take a human hours to compile.

This kind of sophisticated tool use is becoming instinctive in modern models. OpenAI’s recently released o3 and o4-mini excel at seamlessly choosing the right tools, knowing when to deploy them, and reasoning through results without constant guidance. They’ve been trained not just to think step-by-step, but to act step-by-step.

The complexity these models can handle is remarkable. In one complex case, OpenAI’s o3 model made over 600 tool calls, reasoning through each output before delivering a single, coherent response. This sustained, autonomous work, combining clear goals, persistent memory, and intelligent action, illustrates the leap from ‘prompt and respond’ to independent digital workers.

But how do we quantify how autonomous leading AI agents are today?

The Autonomy Benchmark

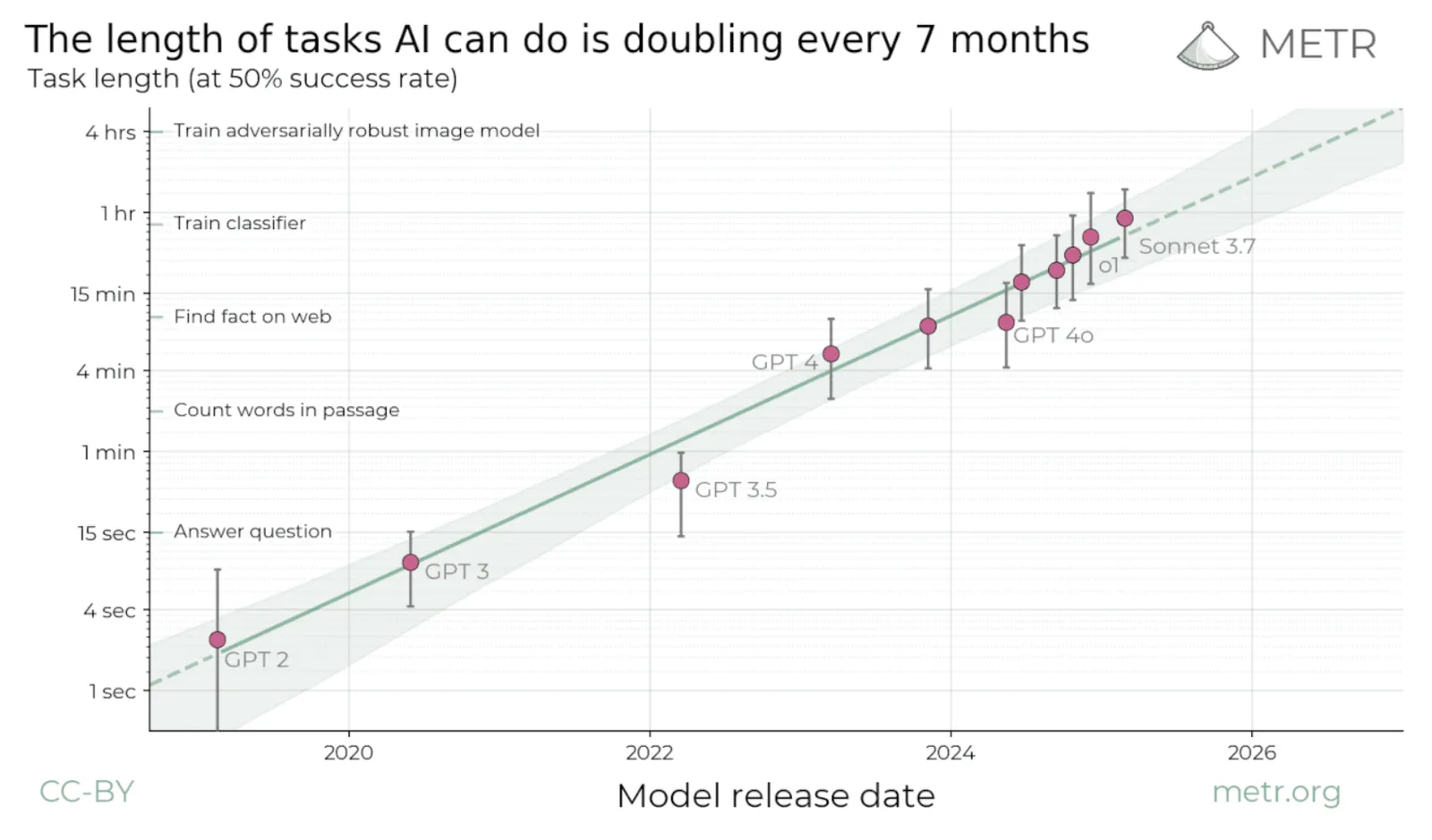

METR, an AI safety and evaluation organisation founded by a former OpenAI researcher, has introduced a compelling new way to measure real-world AI autonomy: task length.

Task length reflects how long it would take a human expert to complete the same task. METR gathers this data by having human professionals perform complex, multi-step reasoning tasks that are also given to the AI.

The results are remarkable. Today’s leading AI can independently complete tasks that would otherwise take a human expert around 20 minutes, but does so in just seconds or a few minutes. Furthermore, METR has found that task length roughly doubles every seven months. In seven months, agents could handle 40-minute tasks. Seven months later, 80-minute ones.

What’s truly staggering is that, assuming the trends of the last six years continue, in around five years frontier AI will handle tasks that would otherwise take an expert a month of work. In five to ten years, tasks that would take an expert a full year.

Frontier-model autonomy is doubling roughly every seven months (METR data).
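The arithmetic behind these projections is simple exponential growth: starting from a 20-minute horizon that doubles every seven months, the horizon after t months is 20 × 2^(t/7) minutes. The Python sketch below shows how that compounds. The 160-hour working month and 2,000-hour working year are assumptions introduced here to convert “a month” and “a year” of expert time into minutes; they are not METR figures.

```python
import math

START_MINUTES = 20          # today's horizon, as stated above
DOUBLING_MONTHS = 7         # METR's observed doubling time, as stated above
WORK_MONTH_MIN = 160 * 60   # assumption: 160 working hours per month
WORK_YEAR_MIN = 2000 * 60   # assumption: 2,000 working hours per year

def horizon_after(months: float) -> float:
    """Task length (minutes) agents could handle after `months`, if the trend holds."""
    return START_MINUTES * 2 ** (months / DOUBLING_MONTHS)

def months_until(target_minutes: float) -> float:
    """Months until the horizon reaches `target_minutes`, if the trend holds."""
    return DOUBLING_MONTHS * math.log2(target_minutes / START_MINUTES)

for m in (7, 14):
    print(f"after {m} months: {horizon_after(m):.0f}-minute tasks")
print(f"month-long tasks: ~{months_until(WORK_MONTH_MIN) / 12:.1f} years")
print(f"year-long tasks: ~{months_until(WORK_YEAR_MIN) / 12:.1f} years")
```

Under these assumptions the horizon reaches 40 and 80 minutes after seven and fourteen months, month-long tasks arrive after roughly 5.2 years, and year-long tasks after roughly 7.3 years. Different definitions of a working month or year shift these dates by months, not by the order of magnitude.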

Applicability will vary by job, but the signal is clear: autonomy is accelerating. Imagine handing off a project that would take an expert human a year and getting it back in days or weeks: faster, cheaper, better.

We’re hiring

If you’re interested in solving hard problems in the legal space by building and leveraging agentic AI systems, check out our open roles and get in touch via our Careers Page. Even if nothing listed quite matches your experience, feel free to connect and message our CTO, Andrew Thompson, directly on LinkedIn. He’s always happy to have a casual chat over video or coffee.